Belief Space Planning under Epistemic Uncertainty for Learned Perception Systems

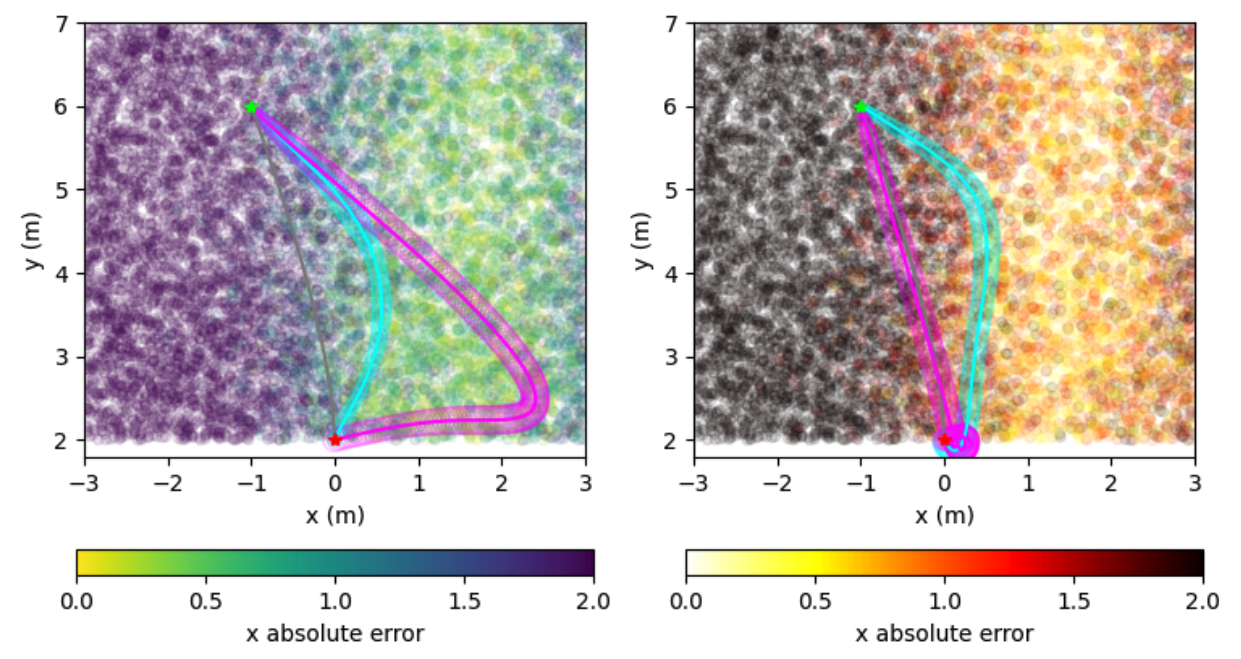

Learning-based models for robot perception are known to suffer from two distinct sources of error: aleatoric and epistemic. Aleatoric uncertainty arises from inherently noisy training data and is easily quantified from residual errors during training. Epistemic uncertainty, by contrast, arises from a lack of training data, typically in out-of-distribution operating regimes, and is difficult to quantify. Most existing state estimation methods handle aleatoric uncertainty through a learned noise model but ignore epistemic uncertainty. In this work, we propose (i) an epistemic Kalman filter (EpiKF) that incorporates epistemic uncertainty into state estimation with learned perception models, and (ii) an epistemic belief space planner (EpiBSP) that builds on the EpiKF to plan trajectories that avoid areas of high epistemic and aleatoric uncertainty. Our key insight is to train a generative model that predicts measurements from states, “inverting” the learned perception model that predicts states from measurements. We compose these two models in a sampling scheme to give a well-calibrated online estimate of combined epistemic and aleatoric uncertainty. We demonstrate our method in a vision-based drone racing scenario and show that it outperforms existing methods that treat the measurement noise covariance as a learned output of the perception model. Code can be found at https://github.com/StanfordMSL/Epistemic-Planning-Filtering.
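The mechanism summarized above can be illustrated with a small, self-contained sketch. This is one possible interpretation under simplifying assumptions, not the EpiKF or EpiBSP algorithm as published: it assumes a scalar state, a perception output treated as a direct (H = 1) observation of the state, and a Monte Carlo estimate of the measurement noise variance obtained by sampling states from the current belief, passing each through a generative (state to measurement) model and then through the learned perception (measurement to state) model. The function names `estimate_measurement_variance`, `kf_update`, `path_cost` and `plan`, and the toy models `toy_generative` and `toy_perception`, are hypothetical and do not come from the authors' repository.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

# Hypothetical model signatures (assumptions, not the paper's API):
#   generative(x, rng) -> y : samples a measurement given a state
#   perception(y)      -> z : learned model mapping a measurement to a state estimate
Generative = Callable[[float, random.Random], float]
Perception = Callable[[float], float]


def estimate_measurement_variance(mu: float, var: float, generative: Generative,
                                  perception: Perception, n_samples: int = 500,
                                  seed: int = 0) -> float:
    """Monte Carlo estimate of perception error variance around the belief N(mu, var).

    Each sample draws a state from the belief, generates a measurement for it,
    and records how far the perception output lands from the sampled state.
    The mean squared residual reflects both sensor noise (aleatoric) and
    systematic model error where training data is missing (epistemic).
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        x = rng.gauss(mu, math.sqrt(var))
        z = perception(generative(x, rng))
        total += (z - x) ** 2
    return total / n_samples


def kf_update(mu: float, var: float, z: float, r: float) -> Tuple[float, float]:
    """Scalar Kalman measurement update, treating z as a direct (H = 1) observation."""
    k = var / (var + r)
    return mu + k * (z - mu), (1.0 - k) * var


def path_cost(waypoints: Sequence[float], var0: float, q: float,
              generative: Generative, perception: Perception) -> float:
    """Belief-space cost of a nominal path: sum of predicted posterior variances.

    The Kalman variance update does not depend on the measurement value, so it
    can be propagated along a candidate path before any measurement arrives.
    """
    var, cost = var0, 0.0
    for x in waypoints:
        var += q  # prediction step: add process noise
        r = estimate_measurement_variance(x, var, generative, perception)
        _, var = kf_update(x, var, x, r)  # only the variance is used here
        cost += var
    return cost


def plan(candidates: List[Sequence[float]], var0: float, q: float,
         generative: Generative, perception: Perception) -> int:
    """Return the index of the candidate path with the lowest belief-space cost."""
    costs = [path_cost(p, var0, q, generative, perception) for p in candidates]
    return min(range(len(costs)), key=costs.__getitem__)


# Toy models (illustrative only): the sensor adds Gaussian noise, and the
# perception model is accurate only inside its assumed training range |y| < 2.
def toy_generative(x: float, rng: random.Random) -> float:
    return x + rng.gauss(0.0, 0.1)


def toy_perception(y: float) -> float:
    return y if abs(y) < 2.0 else 1.5 * y  # systematic error out of distribution


if __name__ == "__main__":
    r_in = estimate_measurement_variance(0.0, 0.04, toy_generative, toy_perception)
    r_out = estimate_measurement_variance(5.0, 0.04, toy_generative, toy_perception)
    print(f"R in-distribution: {r_in:.4f}, out-of-distribution: {r_out:.2f}")
    best = plan([[0.0, 0.5, 1.0], [0.0, 3.0, 5.0]], 0.04, 0.01,
                toy_generative, toy_perception)
    print("selected path:", best)
```

In this toy setting the sampled variance stays near the sensor noise level inside the training range but grows sharply outside it, so the filter largely ignores out-of-distribution perception outputs and the planner prefers the path that stays in distribution. Using the mean squared residual rather than the sample variance is a deliberate choice here: it also penalizes systematic bias, which is how epistemic error shows up in the toy perception model.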